Creating a Responsible AI Policy: Top 10 Things to Know

If your district wants to craft an effective and comprehensive AI policy, there’s a lot to consider

For school and district leaders facing the task of creating a responsible AI policy, an understanding of the different types of AI and of domain specificity matters.

During a recent Tech & Learning webinar, Leigh Hall, Director of Research for Merlyn Mind, explored ten critical aspects essential for creating a responsible AI policy. The session covered key areas including data privacy, safety and security, training and support, student well-being, and more.


Key Takeaways

The top ten things to know about creating a responsible AI policy, according to Hall:

1. Why Use AI?

Hall discussed the need to make clear the rationale for implementing AI in your district. For example, AI can enhance learning opportunities, improve digital literacy skills, increase efficiency, and create a more equitable and accessible learning environment.

“AI integration streamlines administrative tasks, allowing educators to focus more on teaching and student support, thereby enhancing overall productivity and job satisfaction,” said Hall. It can also help with personalized learning, providing data-driven insights, and preparing students for a future in which using AI will be necessary.

“One of the fears and concerns we hear from teachers is that critical thinking skills will go down,” she said. “But it does not have to be that way! Ideally, it’s going to inspire creativity. Students are pretty excited about these tools, and we can use these tools to expose them to what’s going on in the world.”


2. Data Privacy, Safety, and Cybersecurity

When approaching AI data privacy and security, Hall recommended first outlining protocols around student data that comply with local and national regulations. Then, explain how your district will adhere to ethical and safety standards, provide criteria for how and when AI will be used, and define roles and responsibilities for those associated with its use.

Hall endorsed informed consent, saying, “Transparent communication and obtaining informed consent from parents and guardians will be prioritized, ensuring that they understand how student data is used and protected.” To protect data, she also recommended that districts comply with regulations, use secure storage, and control access.

“If you’re adopting an AI tool, you need to know who owns the data,” she said. “Do you own it, or does the company who creates the tool own it? And if they say they own the data, what does that mean?”

Providing cybersecurity training, having an incident response plan, and maintaining continuous monitoring are also critical.

3. Bias and Fairness

Using AI in an equitable, fair, and unbiased manner is critical, Hall said. That includes ensuring that any AI tools promote an equitable and inclusive experience, explaining whether you plan to use diverse datasets, promoting training to recognize AI bias, and creating protocols to monitor it all.

Hall discussed mitigating bias in AI tools, including the importance of diverse datasets. “Ideally, diverse datasets will accurately reflect the demographics of the student population and the broader community,” she said.

4. Transparency and Accountability

Being transparent and accountable about your district’s AI use is critical, said Hall, adding that districts need to fully disclose how AI is being used in educational settings and define the mechanisms for accountability.

“Providing comprehensive information about the application of AI technologies fosters transparency,” she said. “This includes sharing details about specific use cases, intended outcomes, and potential implications.”

Accountability practices should include full disclosure about systems, a dedicated reporting channel, and prompt investigation of any reports.

5. Developing a Framework for AI Procurement

Having clearly stated guidelines and protocols for adopting an AI tool is key, said Hall. When doing so, a comprehensive needs assessment should be undertaken first, followed by due diligence and a pilot test of any tool.

“Gather comprehensive feedback to understand the tool’s practical implications and user experience,” said Hall. “Analyze data on the tool’s impact and performance to make an informed decision about wider implementation.”

6. Educator Training and Support

Obviously, providing professional development is critical for the implementation of AI tools. Educators need to understand that ongoing AI PD opportunities can help build their overall digital literacy, Hall said, including best practices around ethical use.

In addition to PD programs and curated resources, a community of practice can provide additional support. “A community of practice is established to foster collaboration and knowledge sharing among educators, enabling them to stay informed about best practices and emerging trends in AI education.”

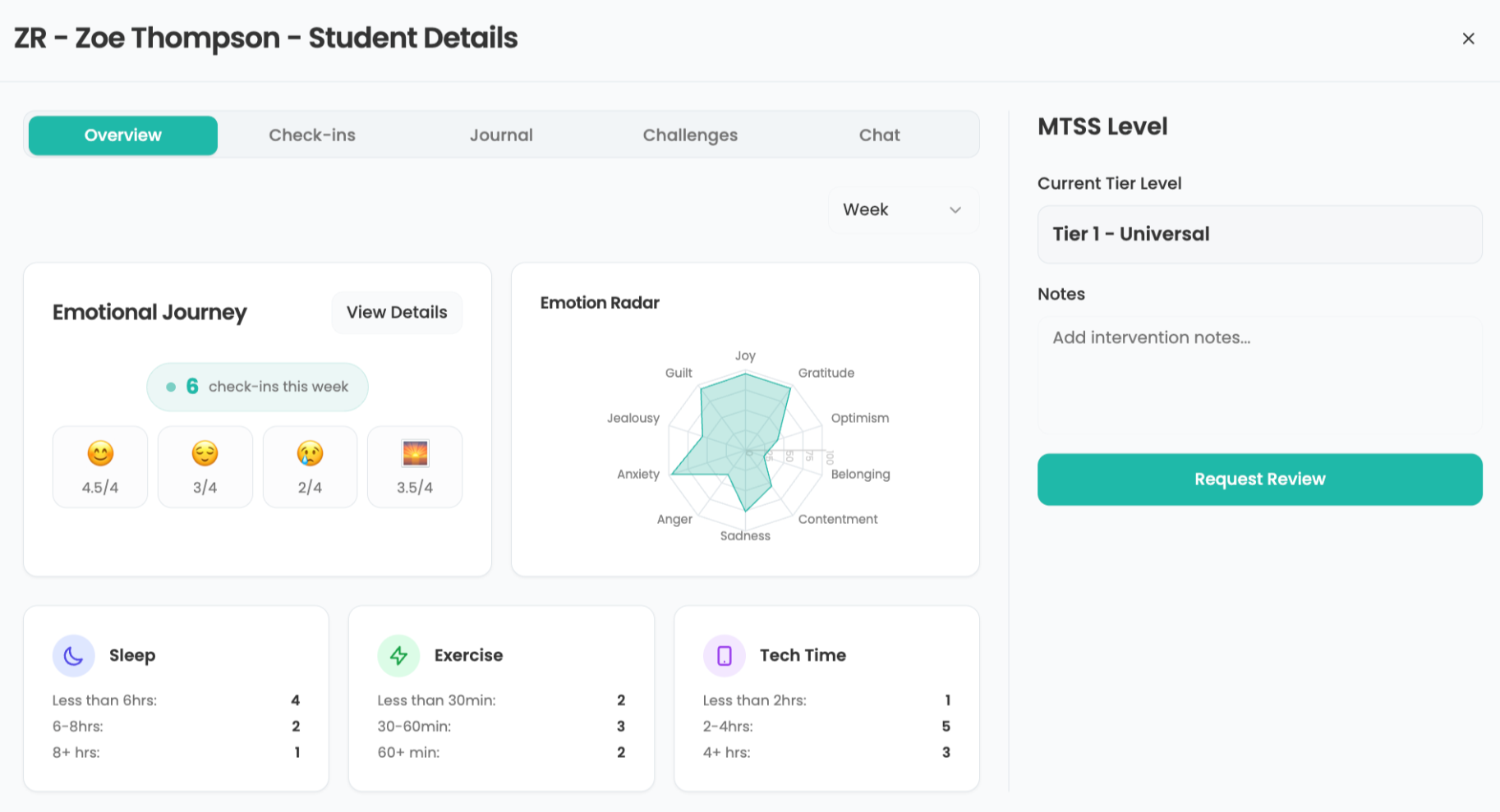

7. Student Well-Being

Student well-being and safety need to always be a priority, said Hall. This includes creating guidelines for monitoring and addressing potential negative impacts, and explaining how AI will support accessibility and help all students, including those with disabilities and English language learners (ELLs).

It’s also important to create an AI environment in which students feel safe and empowered, and that allows for parental engagement. “Engaging parents and guardians in discussions about AI technology use and safety ensures that they are informed and involved in supporting their children’s well-being in the digital environment,” she said.

8. Parent and Community Engagement

“What you want to do first is to understand what parental concerns are,” said Hall. “Have a forum for parents and community members to come in. And we have to know the top three to five concerns, and then we can develop a strategy to address those concerns.”

In addition, you need to establish how you will explain the ongoing use of AI technology, and develop a reliable system to receive and process parent and community feedback and concerns. This can include dedicating specific communication channels, creating opportunities for informational discussions and workshops, and collaborating with local organizations.

9. Evaluation and Continuous Improvement

As with any initiative, prepare for ongoing evaluation of any AI technology, which should include defining processes for assessing effectiveness and learning outcomes as well as using data-driven insights.

Performance metrics, feedback loops, and flexibility will all be part of the evaluation process. “Adopt an adaptive approach to AI tool implementation, allowing for iterative improvements and adjustments based on feedback and evolving educational needs,” Hall suggested.

10. Roll-Out Plans

When it’s time to actually implement AI technology, have a clear plan and timetable in place to maximize benefits and minimize disruption, Hall said. Also plan ahead for the hardware, software, and personnel necessary to support AI in your district.

“Remember, we want realistic goals, timelines, and outcomes for that AI implementation,” Hall said.

Ray Bendici is the Managing Editor of Tech & Learning and Tech & Learning University. He is an award-winning journalist/editor, with more than 20 years of experience, including a specific focus on education.