AI Chatbot Friendships: Potential Harms and Benefits For Students

“Friendships” between humans and AI chatbots are becoming more common. Mental health experts worry they can be harmful but also see potential if the chatbots are designed with real-world relationships in mind.

Students of all ages have begun to regularly interact with chatbots, both inside and outside of school, for a variety of reasons. Just how little is known about the impact of these interactions on young people was brought tragically to light recently after the suicide of a 14-year-old boy in Florida. The boy frequently used Character.AI, a role-playing generative AI tool, and spoke with a Game of Thrones-inspired character on the app moments before he fatally shot himself. The boy’s mother has filed a lawsuit against Character.AI alleging the company is responsible for his death, The New York Times reported.

While this is an extreme case, there is potential for unhealthy interactions between chatbots and young people, says Heidi Kar, a clinical psychologist and the Distinguished Scholar for mental health, trauma, and violence initiatives at The Education Development Center (EDC), a nonprofit dedicated to improving global education, among other initiatives.

More and more people are turning to AI chatbots for friendships and even pseudo-romantic relationships. Kar isn’t surprised that many people, including students, are looking for relationships with AI rather than with real people, since chatbots will tell them what they want to hear rather than offer hard truths. “Relationships in real life with real people are hard, they're messy, and they don't always feel very good,” Kar says.

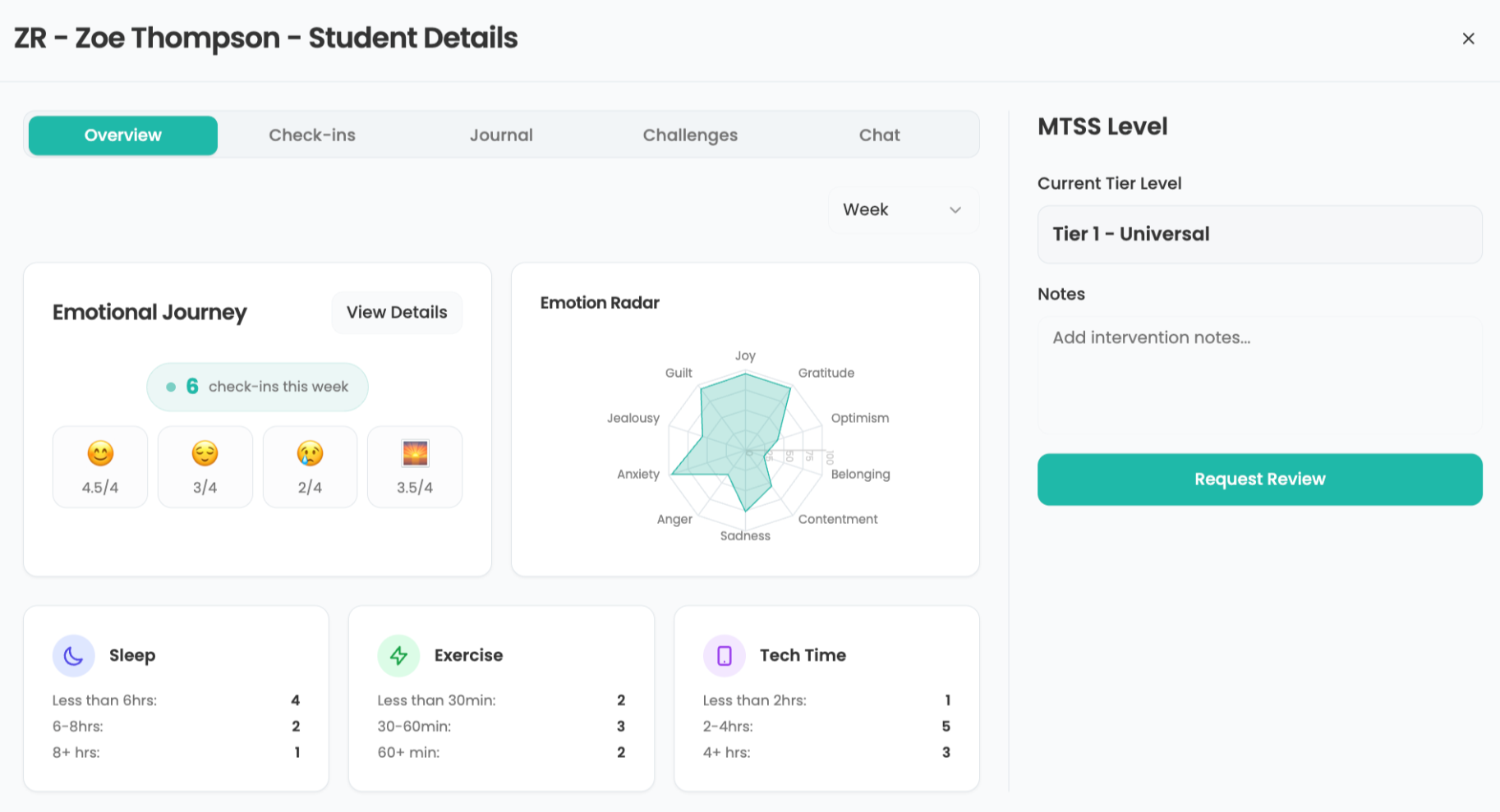

Despite this, Kar believes there are ways in which chatbots can help educate students about mental health. That’s why she has teamed up with Simon Richmond, an EDC instructional designer, to help create Mental Health for All, a digital mental health program that utilizes AI to help teach students mental health best practices. The evidence-based program is designed to provide psychology skills training for large populations of youth globally. It combines interactive audio instruction and stories, and integrates AI chatbots and peer-to-peer interaction.

Kar and Richmond share best practices from this initiative and discuss potential problems around AI chatbots and mental health, as well as some ways chatbots might support it.

Challenges With AI and Mental Health

Kar says that educators should be aware that their students might be engaging in potentially unhealthy relationships with chatbots, though research into human-chatbot relationships is limited.

“The comparable research we have to date, in my mind, in the psychology field, goes to the porn industry,” she says. “People who have turned to porn and away from actual relationships to get kind of the sexual satisfaction, have a very hard time coming back into actual relationships. It's the same idea as chatbots. The fake is easy and it can be incredibly satisfying, even if it doesn't have the same physical aspect.”


Richmond says that while designing their mental health program, they made sure to avoid creating AI "yes" people that would just tell those who interact with them what they want to hear.

“There's more opportunities for personal growth when you're interacting with something that gives you hard truths, where there's friction involved,” he says. “Straight gratification does not lead to personal improvement, and so our goal with these AI characters is to provide the empathy and the interactivity and a warm relationship, but to still deliver the skills and the advice that the young people need to hear.”

Potential For AI Chatbots To Help With Mental Health Education

Kar and Richmond believe that, when programmed correctly, AI chatbots can provide an important outlet for students.

“There's a lot of societies around the world where mental health and emotional awareness is talked about in very different ways,” Richmond says. “So you might have rich human relationships with peers and with adults but with a culture around you that doesn't have a vocabulary to talk about emotions or to talk about different mental health challenges.”

The chatbots in Richmond and Kar's psychology program are based on characters that the students learn about and connect with. The AI is designed to provide exactly this type of outlet for students who otherwise might not be able to have conversations about mental health.

“This is a way of establishing talking to people who have a vocabulary and a set of skills that don't exist in the culture around you,” Richmond says.

Chatbot Potential At The Personal Level

Beyond assisting with a science-driven curriculum, Kar believes there’s untapped potential for using people’s relationships with AI to improve their real-world relationships.

“As opposed to AI characters out there who can create their own relationship with you, what about focusing on that AI character helping you to manage your personal relationships better,” she says.

For example, instead of an AI saying something such as, “I'm sorry. No one understands you, but I understand you,” Kar would like an AI that says things such as, “What do you think would make that better? That fight sounds tough. How are we going to get through that?”

She adds, “There's so much we know that we could program into AI personalities about human interaction and bettering your relationships and conflict resolution and how to deal with anger, but always having that string back to how are we going to address this?”

Ultimately, Kar envisions a conversation such as, “‘You've never had a girlfriend. You really want a girlfriend. What do you think some things are that can get you there?’ As opposed to the ‘I understand you. I'm here for you’ approach.”

Erik Ofgang is a Tech & Learning contributor. A journalist, author and educator, his work has appeared in The New York Times, the Washington Post, the Smithsonian, The Atlantic, and Associated Press. He currently teaches at Western Connecticut State University’s MFA program. While a staff writer at Connecticut Magazine he won a Society of Professional Journalism Award for his education reporting. He is interested in how humans learn and how technology can make that more effective.