I Tested Grammarly’s AI Writing Assistant For Teaching. I Love And Hate It

When Grammarly's AI writing assistant is working, it's great, but when it's bad, it's very bad.

Not to get too philosophical, but I think one beautiful thing about humans is our ability to hold two contrary viewpoints at the same time. It’s one of the aspects that make human writing so hard for AI to duplicate well: we are creatures of contradiction and nuance, and machines struggle to get that.

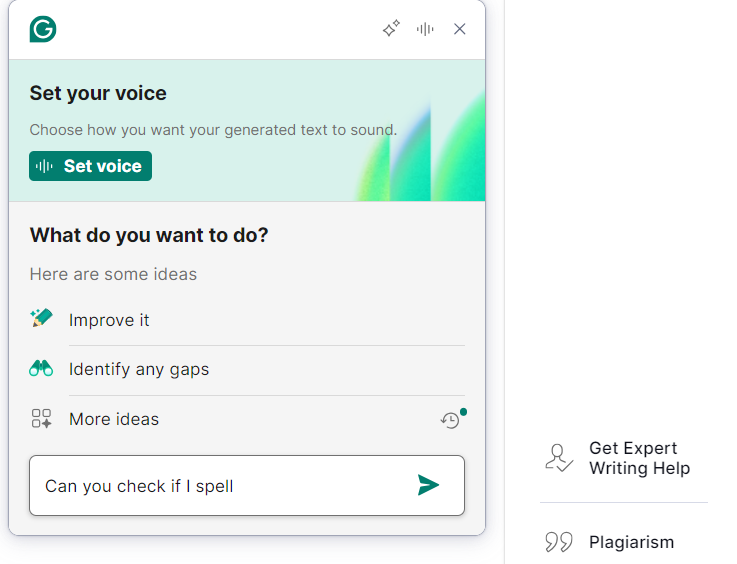

All of that is a roundabout way of explaining my feelings when it comes to Grammarly’s AI writing assistant. I’ve used Grammarly’s spellcheck for years and encourage all my students to do the same. So I was intrigued by its launch of an AI assistant in Fall 2023 and finally got around to testing it out myself.

Using the free web version that allows 100 prompts per month, I asked it to critique works of writing I created, asked it for help answering real writing prompts I use with my students, and had it respond to actual examples of student writing.

After doing this, I’m conflicted. When working at its best, Grammarly’s AI assistant comes as close as any tool I’ve used to living up to the potential of generative AI tutoring, providing supportive, thoughtful, and frequently very good feedback on student work.

Conversely, when it misses the mark, it misses wide, confidently providing inaccurate assurances and, worse, making it easy to use AI to cheat. I’d argue it could provide a strong temptation to cheat even among students who initially turned to the tool for help without that intention.

Let’s take a closer look at Grammarly's AI writing assistant.

Grammarly’s AI writing assistant: The Good

Grammarly bills its AI assistant as a tool that will help the writing process by providing assistance with tasks such as brainstorming and ideation. And when it successfully sticks to this type of usage, it is, simply put, awesome.

I took a story I wrote for another publication that I felt was pretty close to being ready to submit and put it in Grammarly's web-based document editor. I then asked the Grammarly assistant to make suggestions by clicking on the "assistant" and "generative AI" options on the menu. It provided several good suggestions and pointed out one real weakness in the story I was ignoring — I had not provided enough specific background info on two companies mentioned.

I also submitted parts of two real student papers written for one of my class assignments (both submissions were partial and free of any information that could identify the students). I would have scored one of these submissions in the high 90s, and the other in the 70s. In both cases, Grammarly provided encouraging and helpful feedback. Particularly with the weaker paper, it pointed out several of the missing elements that I or another instructor would likely note when grading.

Grammarly’s AI also initially impressed me with its honesty when it couldn’t help. For instance, I asked it if it could make sure that my story was written in accordance with Associated Press-style guidelines. It politely said it could not.

This is great because the sign of a smart AI tutor — or person — is one that admits it doesn’t know everything. Unfortunately, it wasn’t always so honest about what it could and could not do.

The Bad

When I asked Grammarly’s AI assistant to confirm that I had spelled a person’s name correctly and consistently throughout the story, the AI confidently assured me I had, even though I hadn’t. I’m not sure if this was a true hallucination or if it just couldn’t quite figure out the question. Either way, it wasn’t good.

More significantly, when I put in a prompt used for one of my classes and asked for help writing an essay based on it, it provided an “example” by writing the essay for me. While getting an example of an essay based upon a prompt could be helpful, this was more or less writing the essay for the student, which is exactly what ChatGPT and other AI tools do for students. I had hoped Grammarly’s AI assistant would avoid this.

Furthermore, it’s easy to imagine how a student who put in a prompt asking for help and got a complete essay instead might be tempted to just submit that essay.

The Ugly

The more I looked into these negatives, the worse it got.

Grammarly’s AI assistant insisted I had spelled one source’s name correctly even when I specifically asked it to check for the misspelling that I had intentionally placed in the story.

And in an unsettling and somewhat humbling turn of events, after putting my prompt into Grammarly’s AI assistant, I realized that my worries about some of its features were not hypothetical. For example, this week (I'm teaching a course this summer), I’m nearly certain that at least two of my students generated entire essays using Grammarly’s AI. I also believe it’s likely that many of the AI-generated submissions I have seen in my classes in the past were produced with Grammarly’s AI assistant. This is particularly unsettling as I had advised all students to use Grammarly. Did I inadvertently push them to the dark side?

To be sure, some of these problems are surmountable. For school accounts, perhaps Grammarly’s AI assistant could send copies of the examples generated for students to instructors. Or better yet, it could be programmed not to generate examples of full essays. AI engineers could work more closely with writing instructors to help address common needs and avoid hallucinations.

Other Grammarly features have long impressed me, so I am confident Grammarly will make improvements going forward. I hope this happens soon because if you eliminate this tool’s problematic features, you'd be left with something that really lives up to the educational promise of generative AI.

Erik Ofgang is a Tech & Learning contributor. A journalist, author and educator, his work has appeared in The New York Times, the Washington Post, the Smithsonian, The Atlantic, and Associated Press. He currently teaches at Western Connecticut State University’s MFA program. While a staff writer at Connecticut Magazine he won a Society of Professional Journalism Award for his education reporting. He is interested in how humans learn and how technology can make that more effective.